
Multimedia Lab

During my PhD (2002-2006), my work was closely related to the MIP lab, which is well equipped for the analysis of human motion. My goal was to make use of all the hardware and to integrate the different subsystems so that robust and efficient analysis of a person moving in front of the screen became possible. The integration included three cameras for observing the scene, two microphones for audio detection and issuing commands, two projectors for 3D stereo visualization, and nine PCs for processing the data and rendering.

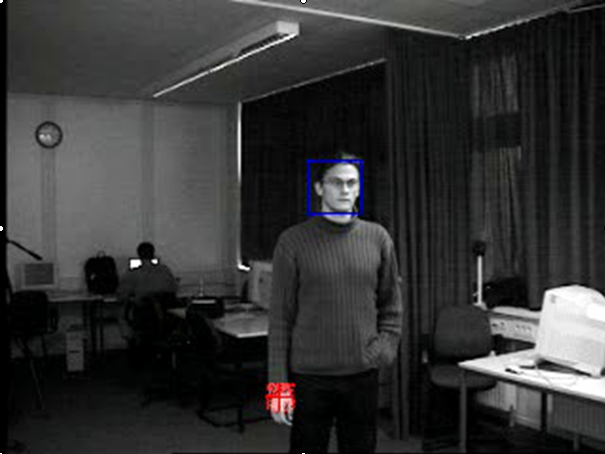

The movie (MPEG-1, 9MB) on the right shows our person-tracking and multi-display rendering system, in which four Linux PCs estimate the person's head position and five PCs render the scene. The user's head position is computed, and the scene view is adapted according to the height of the head. By walking around on the floor, the person can navigate through the scene: standing at the front means moving forward, standing on the left means rotating left, and so on. For the displays to remain clearly visible, the scene has to be rather dimly lit, which makes the image processing very difficult. A Bayesian approach using particle filters nevertheless makes it possible in real time (see publications, CAIP2005).
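The floor-based navigation described above can be pictured as a mapping from the tracked head position on the floor to a motion command. The sketch below is only an illustration under stated assumptions: the coordinate frame (origin at a neutral spot, x towards the person's left, z towards the screen), the dead zone, the ramp shape, and the names `NavCommand`, `ramp` and `floor_to_navigation` are all hypothetical and not taken from the actual system.

```python
from dataclasses import dataclass


@dataclass
class NavCommand:
    forward: float  # -1..1, positive = move forward into the scene
    turn: float     # -1..1, positive = rotate left


def ramp(v: float, dead_zone: float, full_at: float) -> float:
    """0 inside the dead zone, rising linearly to +/-1 at |v| >= full_at."""
    a = abs(v)
    if a <= dead_zone:
        return 0.0
    r = min((a - dead_zone) / (full_at - dead_zone), 1.0)
    return r if v > 0 else -r


def floor_to_navigation(x: float, z: float,
                        dead_zone: float = 0.3, full_at: float = 0.8) -> NavCommand:
    """Map the head's floor position (metres) to a navigation command.

    Hypothetical frame: (0, 0) is the neutral spot in the interaction area,
    x grows towards the person's left, z grows towards the screen.
    """
    return NavCommand(forward=ramp(z, dead_zone, full_at),
                      turn=ramp(x, dead_zone, full_at))
```

For example, `floor_to_navigation(0.0, 1.0)` returns `NavCommand(forward=1.0, turn=0.0)`: standing well towards the screen means full speed forward. The dead zone keeps small tracking jitter around the neutral spot from moving the view.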

The system is also used for public demonstrations. In this movie (MPEG-1, 25MB), Prof. Koch first introduces the system; later in the video, guests from "Girls' Day" try out the interaction themselves. The movie also shows very well the difficulties we face in this environment.


An overview of the interaction space (click for 9MB MPEG-1 movie)


Real-Time Face Tracking

Two pan-tilt cameras track the user's face during the interaction. The tracking is especially difficult because the lighting is not only rather dim but also changes dramatically during rendering, since the main light in the scene is emitted by the screens. The color of the light therefore depends on which part of the scene is currently being displayed.

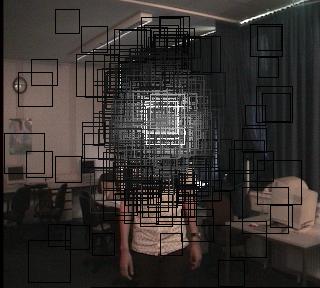

We achieve robust tracking with a CONDENSATION approach using face color and a face detection/recognition algorithm. The face color is stored as a dynamic histogram, while the face detection is an adapted version of the trained classifier cascade available in OpenCV. Our adaptation enables faster use within CONDENSATION. Results of the tracking can be seen on the right.

The image shows the sample distribution of the CONDENSATION particle filter. Here the state of each particle is the image position and the scale of the face; brighter rectangles represent higher probabilities. The movie also shows the panning and tilting of the camera. Note that the camera does not move constantly but as seldom as possible, so that the calculated projection matrix of the camera stays valid for as long as possible.
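To make the particle state concrete, here is a minimal sketch of one CONDENSATION iteration (select, predict, measure) over the (x, y, scale) face state. It assumes a simple random-walk motion model with hypothetical noise values, and it replaces the real observation model (color histogram plus cascade detector) with a hypothetical synthetic Gaussian likelihood, `synthetic_face_likelihood`, so that the example is self-contained. It is not the system's actual implementation.

```python
import math
import random
from typing import Callable, List, Tuple

State = Tuple[float, float, float]  # (x, y, scale) of one face hypothesis


def condensation_step(particles: List[State], weights: List[float],
                      likelihood: Callable[[State], float], rng: random.Random,
                      noise: State = (4.0, 4.0, 0.05)) -> Tuple[List[State], List[float]]:
    """One select-predict-measure iteration of a CONDENSATION particle filter."""
    n = len(particles)
    # Select: resample hypotheses in proportion to their current weights.
    chosen = rng.choices(particles, weights=weights, k=n)
    # Predict: random-walk motion model (Gaussian diffusion in x, y and scale).
    predicted = [(x + rng.gauss(0.0, noise[0]),
                  y + rng.gauss(0.0, noise[1]),
                  max(1e-3, s + rng.gauss(0.0, noise[2])))
                 for (x, y, s) in chosen]
    # Measure: weight each hypothesis by the observation likelihood, then normalize.
    raw = [likelihood(p) for p in predicted]
    total = sum(raw)
    if total <= 0.0:
        return predicted, [1.0 / n] * n  # every hypothesis rejected: uniform weights
    return predicted, [w / total for w in raw]


def synthetic_face_likelihood(p: State) -> float:
    """Hypothetical stand-in for the color/detector likelihood: peak at (120, 80), scale 1.0."""
    x, y, s = p
    return math.exp(-((x - 120.0) ** 2 + (y - 80.0) ** 2) / (2 * 10.0 ** 2)
                    - (s - 1.0) ** 2 / (2 * 0.1 ** 2))


def track_demo(iterations: int = 30, n: int = 500, seed: int = 0) -> State:
    """Run the filter on the synthetic likelihood and return the weighted-mean state."""
    rng = random.Random(seed)
    parts = [(rng.uniform(0, 320), rng.uniform(0, 240), rng.uniform(0.5, 1.5))
             for _ in range(n)]
    w = [1.0 / n] * n
    for _ in range(iterations):
        parts, w = condensation_step(parts, w, synthetic_face_likelihood, rng)
    return (sum(wi * p[0] for p, wi in zip(parts, w)),
            sum(wi * p[1] for p, wi in zip(parts, w)),
            sum(wi * p[2] for p, wi in zip(parts, w)))
```

Starting from particles spread uniformly over a 320x240 image, `track_demo()` should return a state close to the synthetic peak. In a display like the one above, each particle's normalized weight would set the brightness of its rectangle.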


Sample distribution of the CONDENSATION particle filter (click for 5MB MPEG-1 movie)


Gesture Tracking and Recognition

Pointing gestures are useful for enabling real interaction with the virtual scene. Our system detects and recognizes them by tracking one hand, again with a CONDENSATION approach. Both pan-tilt cameras are additionally used for tracking the hand. The features used here are again skin color (the same as for the face) and movement. The movement is calculated as the temporal image gradient over a few frames. Movement is a good feature for hand detection, because people tend to move their hands whenever they move at all (with respect to the global coordinate system).
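A simple way to approximate the temporal image gradient over a few frames is to average absolute differences between consecutive grayscale frames. The sketch below assumes that formulation. The window length, the plain averaging and the name `motion_map` are hypothetical choices, and the system's exact gradient computation is not specified here.

```python
from typing import List, Sequence

Frame = List[List[float]]  # grayscale image, indexed as frame[row][col]


def motion_map(frames: Sequence[Frame]) -> Frame:
    """Mean absolute temporal difference per pixel over a short window of frames."""
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    rows, cols = len(frames[0]), len(frames[0][0])
    acc = [[0.0] * cols for _ in range(rows)]
    # Accumulate |I_t - I_(t-1)| for every consecutive pair of frames.
    for prev, cur in zip(frames, frames[1:]):
        for r in range(rows):
            for c in range(cols):
                acc[r][c] += abs(cur[r][c] - prev[r][c])
    pairs = len(frames) - 1
    return [[v / pairs for v in row] for row in acc]
```

With three 2x3 frames in which a bright pixel (value 90) appears at column 1 and then moves to column 2, `motion_map` yields 90.0 at column 1, 45.0 at column 2 and 0.0 elsewhere. Pixels that a moving hand passes over stand out, while a static background stays near zero.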

The movie shows the samples whose probability exceeds a certain level. The red cross marks the estimated hand position, which is calculated as the weighted mean of all sample positions. Here the state is only 2D (the image position of the hand). The size of the red cross shows the uncertainty of the estimated position. This output of the particle filter is very useful for sensor fusion and makes the system more stable and robust.
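The position estimate and its uncertainty can be read directly off the weighted 2D samples. The sketch below computes the weighted mean (the red cross) and, as one plausible measure for the cross size, the weighted standard deviation along each image axis. Using the standard deviation for the cross size is an assumption, and `estimate` is a hypothetical helper name.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # image position (x, y) of one hand sample


def estimate(samples: List[Point], weights: List[float]) -> Tuple[Point, Point]:
    """Return the weighted mean position and the weighted std. deviation per axis."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    mx = sum(w * x for (x, _), w in zip(samples, weights)) / total
    my = sum(w * y for (_, y), w in zip(samples, weights)) / total
    # Weighted variances around the mean; larger spread means a larger cross.
    vx = sum(w * (x - mx) ** 2 for (x, _), w in zip(samples, weights)) / total
    vy = sum(w * (y - my) ** 2 for (_, y), w in zip(samples, weights)) / total
    return (mx, my), (math.sqrt(vx), math.sqrt(vy))
```

For two equally weighted samples at (10, 20) and (14, 20), `estimate` returns a mean of (12.0, 20.0) and a spread of (2.0, 0.0). Passing both values on, instead of the mean alone, lets a sensor-fusion stage down-weight uncertain estimates.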

The hand tracking integrated into the whole system can be seen in this movie (6MB).

The person's pointing ray is calculated as the extension of the line from the head through the hand. Because the system is fully calibrated, the intersection point with the virtual scene can be computed. However, the position estimate is rather noisy, as can be seen by observing the yellow ball. This is due to the small number of cameras used for triangulation (only two) and to the blob-like features used (color and movement).
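Given triangulated 3D positions of head and hand in world coordinates, the pointing target is a ray-plane intersection. This sketch assumes, for simplicity, that the target is a single plane (for example the projection screen) given by a point and a normal. The real system intersects the ray with the virtual scene geometry, and `pointing_target` is a hypothetical name.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def pointing_target(head: Vec3, hand: Vec3, plane_point: Vec3, plane_normal: Vec3,
                    eps: float = 1e-9) -> Optional[Vec3]:
    """Intersect the head->hand pointing ray with a plane; None if there is no hit."""
    d = _sub(hand, head)                  # ray direction, from the head through the hand
    denom = _dot(plane_normal, d)
    if abs(denom) < eps:
        return None                       # ray is parallel to the plane (or head == hand)
    t = _dot(plane_normal, _sub(plane_point, head)) / denom
    if t < 0:
        return None                       # plane lies behind the person
    return (head[0] + t * d[0], head[1] + t * d[1], head[2] + t * d[2])
```

For a head at (0, 2, 0), a hand at (0.25, 1.75, 0.5) and a screen plane z = 2, the hit point is (1.0, 1.0, 2.0) with t = 4. Because the hit point is head + t·(hand − head) and t is typically well above 1, a small error in the triangulated hand position is magnified roughly t-fold at the target, which is consistent with the jitter of the yellow ball.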


Hand and face tracking together (3MB movie)
